At tea parties, if they still have them, but most especially at cocktail parties, which I know are the present craze, you will hear the word “significance.”

Picture a guy dressed in faded jeans and a cotton shirt, poised in deep thought, his chin carefully but gently cradled between forefinger and thumb as the forefinger caresses the side of his face. He mutters to the throng of well-dressed, beautiful people, each carrying a share of the bubbly or the sanguine color of thought in their glass, as they hang on his every syllable: “Significant… I would say.” And the crowd utters a sigh of relief. You can feel them shiver disquietingly, as if the sage might have gone the other way. What then of their future fortunes? What would have happened to their parties? What about their stockholdings, their mansions on a Greek isle or the French Riviera? Why, that would never have worked. We would have to find a better statistician, then, wouldn’t we? the pretty lady in the red gown wonders.

And so, in the dark art of probability, there resides the goose that periodically lays the golden egg of significance. But no one has yet cracked open the egg to see what is inside, and that is where we lay our scene…

What does significance mean? Is this significant compared to that? Does this have more significance than that? The veritable definition of statistical significance is “the extent to which a result is unlikely to be due to chance alone.”

Pay careful attention to the word “extent” here. After all, the pride and joy of every scientist-author is to show that his or her hypothesis-generated experimental model has relevance: that the information from the experiment will yield benefits to the consumer, be it in engineering, mathematics, economics, the social sciences, or especially medicine. We will restrict ourselves to medicine, because that is where everyday lives are helped or hurt by such reliance.

The concept of significance is expressed numerically by the p-value. And the p-value, the fire that heats the water and makes the steam that turns the engine, is defined as “the probability that the observed data would occur by chance in a given single null hypothesis, or the probability of incorrectly rejecting a given null hypothesis in favor of a second alternate hypothesis.” In an experiment, the further the p-value falls below 0.05, the less likely it is that the result is a fluke. The conventional levels of significance are 10% (0.1), 5% (0.05), 1% (0.01), 0.5% (0.005), and 0.1% (0.001). What? What did I just say? “FLUKE?” Well, to be quite truthful, the “p” in “p-value” stands for none other than “probability!” Yes, you read that right: “PROBABILITY.” So a p-value of 0.05 means that if there were truly no difference, a difference at least as large as the one observed would still turn up by sheer chance 5% of the time. In other words, a “FLUKE.”

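For the common two-sided z-test, the “p” is calculated as

$$p = 2\left(1 - \Phi(|z|)\right), \qquad z = \frac{\bar{x} - \mu_0}{\sigma / \sqrt{n}},$$

where $\Phi$ is the cumulative distribution function of the standard normal distribution, $\bar{x}$ is the sample mean, $\mu_0$ is the mean under the null hypothesis, $\sigma$ is the standard deviation, and $n$ is the sample size.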

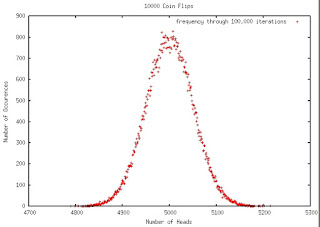
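As a minimal sketch, assuming the textbook case of a z-test with a known standard deviation, the two-sided p-value is 2(1 − Φ(|z|)), where Φ is the standard normal cumulative distribution function and the sample size enters through z = (x̄ − μ₀)/(σ/√n). The helper names and the numbers below are hypothetical, chosen only for illustration.

```python
import math


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value for a z-statistic under a standard normal null.

    Uses p = 2 * (1 - Phi(|z|)), which is identical to erfc(|z| / sqrt(2)).
    """
    return math.erfc(abs(z) / math.sqrt(2))


def z_statistic(sample_mean: float, null_mean: float, sigma: float, n: int) -> float:
    """z = (sample mean - null mean) / (sigma / sqrt(n)); sigma assumed known."""
    return (sample_mean - null_mean) / (sigma / math.sqrt(n))


# Hypothetical numbers: sample mean 5.4, null mean 5.0, sigma 2.0, n = 100
z = z_statistic(5.4, 5.0, 2.0, 100)
p = two_sided_p_value(z)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here z comes out at 2.00 and p at about 0.0455, just under the 5% line; a z of about 1.96 is what lands exactly on p = 0.05.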

Take a coin toss experiment, where each toss yields “heads” or “tails.” The probability of two successive “heads” in two flips is (1/2)^2 = 0.25, and that is certainly possible; it happens all the time that you get two “heads” or two “tails” in two flips. Now do ten flips in a row and the probability becomes much smaller: ten “heads” in a row has a probability of (1/2)^10 ≈ 0.001, and ten of the same face, all “heads” or all “tails,” has a probability of 2 × (1/2)^10 = (1/2)^9 ≈ 0.002, a 0.2% chance. That is how probabilities are ascertained through straightforward arithmetic.
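The coin arithmetic can be checked exactly and by brute force. This sketch computes both readings of the ten-flip case, ten heads in a row, (1/2)^10, and ten of the same face, 2 × (1/2)^10 = (1/2)^9, then estimates the latter with a seeded Monte Carlo simulation. The function names are made up for this example.

```python
import random


def prob_all_heads(flips: int) -> float:
    """Exact probability that every one of `flips` fair-coin tosses lands heads."""
    return 0.5 ** flips


def prob_all_same(flips: int) -> float:
    """Exact probability that all tosses match: all heads or all tails."""
    return 2 * 0.5 ** flips


def simulate_all_same(flips: int, trials: int, seed: int = 1) -> float:
    """Monte Carlo estimate of prob_all_same using a seeded generator."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        tosses = [rng.random() < 0.5 for _ in range(flips)]
        if all(tosses) or not any(tosses):
            hits += 1
    return hits / trials


print(prob_all_heads(2))    # 0.25
print(prob_all_heads(10))   # 0.0009765625
print(prob_all_same(10))    # 0.001953125
estimate = simulate_all_same(10, 200_000)
print(estimate)             # close to 0.00195, varies with the seed
```

The simulation is only there to show that the straightforward arithmetic and a long run of actual (simulated) flips agree.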

Here is how “fluke” plays out when a new drug is evaluated against a standard drug. If two drugs are being compared and the experimental drug shows a benefit over the standard drug by a margin large enough that the benefit is unlikely to be due to chance, then the experimental drug is considered significant in its benefits compared to the standard drug. And that is why, after the clinical trials are over and a lot of money has been spent on the “new great miracle drug,” the reality in the doctor's clinic is, shall we say, different!
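One common way such a drug-versus-drug comparison is scored is a two-proportion z-test on response rates. The sketch below is an assumption about the kind of test a trial might use (real trials may use other methods), and the patient counts are invented purely for illustration.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided pooled z-test for a difference between two response rates.

    Returns (difference in rates, z-statistic, two-sided p-value).
    Relies on the normal approximation, so the counts should not be tiny.
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pool both arms to estimate the common rate under "no difference".
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return rate_a - rate_b, z, p


# Hypothetical trial: 60 of 100 respond to the new drug, 45 of 100 to the standard drug
diff, z, p = two_proportion_z_test(60, 100, 45, 100)
print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts the p-value comes out near 0.034, under the 0.05 line, so the new drug would be declared significant on a difference observed in only 200 patients.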

But here is the devil in this minor detail. In most medical studies, the experts use the cautionary brackets of the Confidence Interval (CI). The Confidence Interval is none other than the limits of significance, based on the sample size (n), that express fairly confidently, within about two standard errors (the standard deviation of the estimate), that the observed benefits of the experimental drug fall within the confines of 95% probability. In other words, if the experiment were repeated many times, intervals built this way would capture the true benefit of the experimental drug about 95% of the time, and by extension would miss it the other 5% of the time. The confidence is determined as: Confidence = Signal/Noise × √(Sample size). And all of this is predicated on the assumption that the model follows the Normal Distribution, also called the “Bell Curve” or “Gaussian Curve.”
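Both ideas fit in a few lines. For a mean, Signal/Noise × √(Sample size) is simply the z-statistic, and the usual normal-approximation 95% interval is the estimate ± 1.96 × Noise/√(Sample size). This is a sketch under the Normal-curve assumption; the function names and the trial numbers are hypothetical.

```python
import math
from statistics import NormalDist


def confidence_from_signal(signal: float, noise: float, n: int) -> float:
    """Signal/Noise x sqrt(n); for a mean, this is the z-statistic."""
    return signal / noise * math.sqrt(n)


def normal_ci(estimate: float, sd: float, n: int, level: float = 0.95):
    """Normal-approximation confidence interval: estimate +/- z* x sd / sqrt(n)."""
    z_star = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    half_width = z_star * sd / math.sqrt(n)  # sd / sqrt(n) is the standard error
    return estimate - half_width, estimate + half_width


# Hypothetical: average benefit 2.0 units, patient-to-patient SD 8.0, n = 100
print(confidence_from_signal(2.0, 8.0, 100))  # 2.5
low, high = normal_ci(2.0, 8.0, 100)
print(f"95% CI: ({low:.2f}, {high:.2f})")
```

The interval here runs from about 0.43 to 3.57: it excludes zero, so the benefit counts as significant, yet it still allows a true benefit barely a fifth of the observed one.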

If you are inquisitive, you might ask: what happens in the other 5%? Well, those are the outliers. And these outliers are considered so far out of the dome of certainty that they need not be counted against the result. What? Yes! It’s true!

As for the outliers, 2.5% on either side of the normal curve (that is, if the curve is not skewed to either side), the grand old dame called the “Bell Curve” rarely considers those little tails. For you see, if we were to start accounting for those outliers in the studies, we would have to greatly increase the number of people taking the experimental drug and compare them with an equal number taking the standard drug. So the little (n) would become the big (N), as if we were to test the drug on all 7 billion people on the planet.
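The sketch below shows both halves of that paragraph: the two 2.5% tails beyond ±1.96 standard deviations, and how the required number of patients per arm climbs as we tolerate less in the tails. It assumes the textbook normal-approximation sample-size formula for comparing two means, with a hypothetical effect size of 0.5 standard deviations and 80% power; `n_per_group` is an illustrative name, not a standard API.

```python
import math
from statistics import NormalDist

std_normal = NormalDist()  # mean 0, standard deviation 1

# Area in each tail beyond +/-1.96 standard deviations: about 2.5% each.
lower_tail = std_normal.cdf(-1.96)
upper_tail = 1 - std_normal.cdf(1.96)


def n_per_group(alpha: float, power: float, effect_size: float) -> int:
    """Approximate patients per arm for a two-sided, two-sample comparison of means.

    Textbook normal-approximation formula:
        n = 2 * (z_{1 - alpha/2} + z_{power})^2 / effect_size^2
    where effect_size is the true difference divided by the common SD.
    """
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


print(f"tails: {lower_tail:.4f} + {upper_tail:.4f}")
sizes = []
for alpha in (0.05, 0.01, 0.001):
    # Shrinking the tolerated tail area drives the required sample size up.
    sizes.append(n_per_group(alpha, power=0.80, effect_size=0.5))
    print(alpha, sizes[-1])
```

Under these assumptions the per-arm count grows from 63 to 94 to 137 as the threshold tightens from 0.05 to 0.01 to 0.001, and it never reaches zero risk.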

A word on skewness: the two 2.5% tails can be thin on one side of the bell and fat on the other, or fat on both sides if the outliers make up more than 5%. These so-called fat tails can outmaneuver and outdistance any fast-running p-value.
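To see how a fat tail outruns the Bell Curve, compare the chance of landing beyond k standard deviations under a normal distribution with the same chance under a fat-tailed distribution of equal variance. The Laplace distribution is used here only as a convenient stand-in for “fat on both sides”; it is an illustrative choice, not something the studies themselves assume.

```python
import math
from statistics import NormalDist


def normal_two_tail(k: float) -> float:
    """P(|X| > k) for a standard normal X (k in standard deviations)."""
    return 2 * (1 - NormalDist().cdf(k))


def laplace_two_tail(k: float) -> float:
    """P(|X| > k) for a Laplace X scaled to unit variance (scale b = 1/sqrt(2))."""
    b = 1 / math.sqrt(2)  # Laplace variance is 2 * b**2, so this gives variance 1
    return math.exp(-k / b)


for k in (1.96, 3.0, 4.0):
    print(f"beyond {k} SD: normal {normal_two_tail(k):.4f}, "
          f"fat-tailed {laplace_two_tail(k):.4f}")
```

At 1.96 standard deviations the fat-tailed curve already holds about 6.25% instead of 5%, and at 3 and 4 standard deviations the gap grows to several times, then dozens of times, the normal figure.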

Ultimately, rationalizing ourselves into this form of accuracy is the best defense against any argument. Incidentally, most of the time a smaller “n” shows a flattened, spread-out curve, the look of platykurtosis, while a bigger “N” gives a more tented curve, the look of leptokurtosis. The difference is that with a bigger “n” the standard error shrinks, so the curve narrows and the tails get thinner and smaller.
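A quick simulation shows the narrowing: draw many samples of size n from a standard normal population, take each sample’s mean, and measure how spread out those means are. Theory says the spread is about 1/√n. The population, the sample sizes, and the function name are assumptions made for this sketch.

```python
import random
import statistics


def spread_of_sample_means(n: int, reps: int = 2000, seed: int = 7) -> float:
    """Standard deviation of `reps` sample means, each from n standard-normal draws."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.gauss(0, 1) for _ in range(n)) for _ in range(reps)]
    return statistics.stdev(means)


for n in (10, 100):
    # Compare the simulated spread with the theoretical 1 / sqrt(n).
    print(n, round(spread_of_sample_means(n), 3), round(1 / n ** 0.5, 3))
```

Going from 10 to 100 people cuts the spread of the estimate by roughly √10, which is why the curve drawn from a bigger sample looks taller and thinner.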

And that, dear reader, is the problem. The significant p-value is usually pegged to 95% confidence, which leaves open the possibility that the entire experiment is a failure. With the outliers left alone, wearing the dunce hat in the corner, whether they make up 5%, 4%, 3%, 2%, or even 1%, no matter how significant the value is, it will never be 100% accurate.
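The leftover risk is easy to demonstrate. In this sketch every simulated trial compares two arms drawn from the same population, so there is no real difference to find, yet about 5% of them still come out “significant” at p < 0.05. The arm size, trial count, seed, and the known-SD z-test are all illustrative assumptions.

```python
import math
import random


def false_positive_rate(trials: int = 2000, n: int = 30,
                        alpha: float = 0.05, seed: int = 11) -> float:
    """Share of simulated 'no real difference' trials that still reach p < alpha.

    Both arms come from the same normal distribution (mean 0, SD 1), so every
    'significant' result here is a fluke. Uses a z-test with the SD known to be 1.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        arm_a = [rng.gauss(0, 1) for _ in range(n)]
        arm_b = [rng.gauss(0, 1) for _ in range(n)]
        # Difference of means divided by its standard error sqrt(1/n + 1/n).
        z = (sum(arm_a) / n - sum(arm_b) / n) / math.sqrt(2 / n)
        p = math.erfc(abs(z) / math.sqrt(2))
        if p < alpha:
            hits += 1
    return hits / trials


rate = false_positive_rate()
print(rate)  # close to 0.05
```

Lowering `alpha` to 0.01 shrinks the share of flukes but does not make it zero.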

In the end, significance is a probability expression with the caveat that the whole experiment may be a failure, the drug may be a failure, and the author’s scientific treatise may be as useless as the 1s and 0s it has accumulated. Harsh, am I? Not when some experts sit on their elbows peering over their Coke-bottle eyeglasses, hands clasped, spines hunched in ominous fashion like birds of prey, pronouncing with exactitude the certainty of their findings. And in the future, when proven wrong, they search for other targets within the failure to prove their point. For failure is not an option! The parties must go on, and the Rodin stance must not be trifled with, for therein, in that perfect, thoughtful, intellectually nuanced moment, lies the mystery of what hides behind the real scientist’s eyeglasses.

(from art5play)

The ideas that prevail in science are unique, and some experiments do end up earning lasting love and fanfare by delivering what they promise. But in these heady days, when publish or perish has taken on the whole new meaning of selling nonsense as sensible science, of plotting a forest of data and curves adorned with famous names, tables that defy logic, and graphs that would make Excel blush, life is being lived in the fast and furious lane.

So next time you say something is “significant,” or exercise the intellect of the p-value because there are a couple of zeros after the decimal point, please understand that it is tailored to the eye of the beholder.
